Cold War Radar System a Trillion-Dollar Fraud – Lester Earnest on RAI Pt 1/5

Profit and deception drove cold-war militarization, says Lester Earnest, founder of the Artificial Intelligence Lab at Stanford; Earnest says the anti-nuclear bomber SAGE radar system never worked and carried on for 25 years – Lester Earnest on Reality Asserts Itself with Paul Jay. This is an episode of Reality Asserts Itself, produced December 24, 2018.

STORY TRANSCRIPT

PAUL JAY: Welcome to The Real News Network. This is Reality Asserts Itself, and I’m Paul Jay, coming to you from Los Altos Hills, south of San Francisco.

Lester Earnest is one of the fathers of artificial intelligence, a phrase he doesn’t actually like very much. He’d rather be called one of the fathers of machine intelligence, and we’ll get into that soon. He is either the creator or co-creator of the first spell checker, the first search engine, self-driving vehicle, digital photography, document compiler with spreadsheets, social networking and blogging service, online restaurant reviews (something called California Yum Yum in 1973, which gave rise to, amongst other things, Yelp.com), and computer-controlled vending machines, a scheme which is now widely used.

Most importantly, he helped found the Stanford Artificial Intelligence Lab in 1966, which has spun off five of the richest companies in the world, which includes Apple, Alphabet—Google, that is—Microsoft, Amazon, and Facebook.

Lester grew up with a right-wing, very Protestant father, who he describes as very bigoted, who hated blacks, Jews, homosexuals, Latinos, and Asians. Lester writes, “I continued on my bigoted path until after I voted for Richard Nixon over John F. Kennedy, then figured out that I was on the wrong track, and eventually evolved into a radical socialist who aims to destroy Wall Street and similar piracy organizations around the world.”

This is, I think, a rather unique Reality Asserts Itself, and it will be the story of Lester’s own evolution, his political evolution, and the development of artificial intelligence—or, as I said, what he would rather call machine intelligence—and the roots of the internet in what he calls a massive fraud. And we’ll get into that story soon, as well.

Now joining us from his home is Lester. Thanks very much for joining us.

LESTER EARNEST: Thank you.

PAUL JAY: So before we get into your story, sort of the technological thread of your story, and the beginnings of your work in machine intelligence and such, talk about your childhood. Because this evolution, from growing up as a self-described bigot to where you are now, what’s the atmosphere when you grew up?

LESTER EARNEST: Well, in the beginning, I was essentially a beach boy. Outside of school I only wore a pair of maroon swimming trunks, summer, and winter, and rode a bicycle, and had a great time.

PAUL JAY: You’re born in what year?

LESTER EARNEST: 1930. Born in 1930.

PAUL JAY: And grew up where?

LESTER EARNEST: San Diego. Great beach town. And had a wonderful time, although I was what many people would call a bad boy. I got into a lot of trouble. But as you said, I did start out as a right-wing Republican racist, and it took me quite a while to get over that. I observed that my mom approached people very differently than my dad. She made friends with everyone she met, regardless of skin color, religion, whatever. She was friendly and tried to help anyone she met. My dad was very much like most businessmen of that era; right-wingers. And it took me a while to figure out that he was not on the right track.

PAUL JAY: And you grow up in the ‘40s, during World War II. Into the Cold War in the ‘50s. You internalized, I would assume, all the kind of values of Americanism that are promoted at that time. The Cold War ideas as the war ends and you move into McCarthyism. Would you describe yourself as a believer in Cold War ideas as you join the armed forces?

LESTER EARNEST: At that time, yeah.

PAUL JAY: So as you become a teenager, the war’s over by the late ‘40s, early ‘50s. The Cold War’s in full steam ahead. How would you describe your view of the world, your political view of the world?

LESTER EARNEST: Well, I was still a Republican. And, well, I’d had a hard time in college, in a way. I got into Caltech on a scholarship, but then managed to flunk out twice. But they still let me graduate. And in my senior year, my draft board told me to report for duty in January, which would mean I’d go into the Army, go off to Korea. And that idea didn’t appeal to me. My dad had enough political clout that he got them to flush the draft. But I knew I had to serve time. So I started looking around for alternatives and found a Navy program for which I was perfectly suited. You had to have an engineering degree, which I had just received, and poor eyesight. If you had good eyesight you were not qualified for being a restricted line officer.

So I went in, became an aviation electronics officer, and ended up in a computer research lab doing simulations of manned aircraft and missiles with computers.

PAUL JAY: This is 1953-1954?

LESTER EARNEST: I got to the lab in ‘54, continued through ‘56. And the people I was working with were all mathematicians, no electrical engineers, so they didn’t know how to deal with a computer. I rewired it, making it much more efficient, and had a good time playing with it in various ways. I ran various experiments. Then [finally] got out of the Navy. And in the process, I had learned of advanced computer work going on at MIT that had been supported by the Navy. But then they pulled the plug, and the faculty members at MIT were scratching their heads how they were going to continue with no funding.

So they put together a bunch of alternative proposals, some of which made sense; for example, they proposed building an automated air traffic control system. And they could have done that, but that part of the government wasn’t buying. They did manage to sell to the Air Defense Command the idea of a manned bomber defense. And at that time in the Cold War, our country was undergoing strong paranoia about the possibility of an attack by the Soviet Union.

PAUL JAY: Which later turned out to be a crock, but a lot of people believed it.

LESTER EARNEST: Yeah. Well, they sold this idea to the Congress, who then-

PAUL JAY: Them being MIT sold this …

LESTER EARNEST: Well, it was more Wall Street. The companies that were going to make a lot of money together with MIT convinced them that they should fund this. And the-

PAUL JAY: Again, this being a system of what, missiles that would shoot down airplanes?

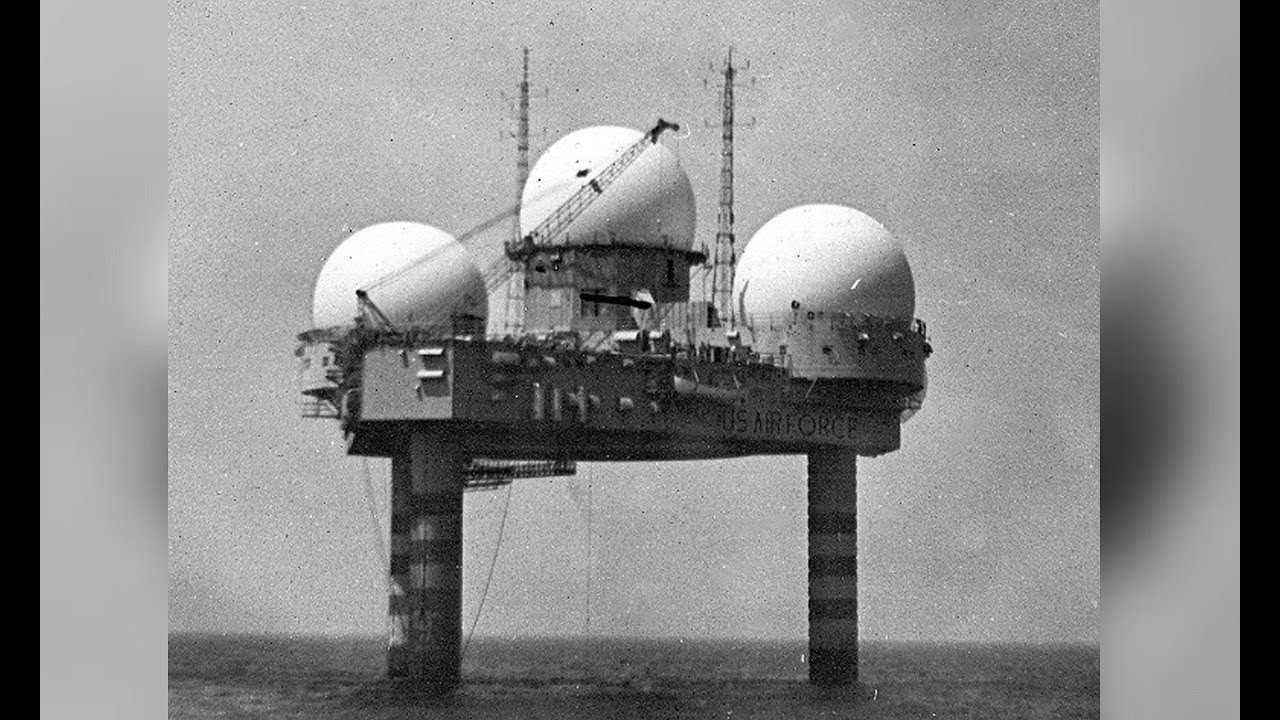

LESTER EARNEST: No. Well, it was, it was a series of radars all across North America that could supposedly track bombers. And then both manned interceptors of various kinds, like Lockheed and [Convair] aircraft. Boeing had a ground-to-air missile system called the Bomarc. And the idea was to then shoot down any incoming bombers.

PAUL JAY: So it was essentially a radar system, is what was being created at MIT.

LESTER EARNEST: Correct.

PAUL JAY: And you get hired to be part of this.

LESTER EARNEST: Yes. They hired me specifically to design the guidance and control system for the manned interceptors and missiles that were going to shoot at the bombers. However, on my first day there, I was assigned to share an office with a fellow who was already working there. This was in MIT’s so-called Lincoln Laboratory in suburban Boston. And I asked him what he did. His name was Paul [Senase]. He said, in a Boston accent, “I work on rada datar.” Dropped the R from ‘radar’ and added it to ‘data.’ Which I found amusing. So I said, what do you do about radar-jamming? He said we don’t do that. I thought he was kidding because if you can’t deal with radar jamming, since all bombers since World War II do it, you’re out of business as an air defense system.

Well, it turns out he was not kidding, as I confirmed shortly. And I kept bringing this up to people at higher levels, and they started holding big conferences trying to figure out a way around this problem, with no success. The problem is that with radar-jamming you get lots of radar data coming in; more than a computer can deal with. So it would just jam up and fail.

Well, they carefully avoided this problem. They were giving demonstrations of this system over time, in which they never—they always used bombers that did not use jamming. And of course, the Russian spies who were observing all of this with instrumentation would know that this was a fake system. But they kept it going for 25 years.

PAUL JAY: This fraud for 25 years.

LESTER EARNEST: Total fraud for 25 years.

PAUL JAY: Because they were spending billions on it. In today’s dollars, anyway.

LESTER EARNEST: Yes. Or maybe even trillions, when you do the money escalation. So-

PAUL JAY: So this is–MIT’s in on this.

LESTER EARNEST: Well-

PAUL JAY: But the military must have known, too; the people that were buying this stuff. I mean, they’re spending the money on it.

LESTER EARNEST: Yeah, but they don’t care. The important thing to them is to have a good life; that is, to have a lot of money to do things with.

PAUL JAY: And it also helps justify their existence, that they are developing this wonderful defense system that will protect everyone from a threat that they maybe know isn’t coming.

LESTER EARNEST: Yeah. And they put out propaganda films showing interceptors intercepting bombers, just like people today are putting out films showing anti-ballistic missile systems taking down missiles, which are also bogus. But that’s another story.

PAUL JAY: While you’re there, you’re asked to design how to put a nuclear warhead on a Bomarc missile, which is supposed to shoot down-

LESTER EARNEST: That was a follow-up. We first just had to put both the missiles and the interceptors in the right position to shoot at a bomber to kill it. But then they came up with the idea of adding nuclear warheads to the missile, which was a really stupid idea. But I was assigned the task of convincing the federal government that they should allow it.

PAUL JAY: What year are we in?

LESTER EARNEST: That was 1960. Or maybe ‘59.

PAUL JAY: And who do you talk to, to persuade to put a nuclear warhead on a missile that’s going to blow a plane up over the United States?

LESTER EARNEST: Yeah. And if the plane happened to be flying at a low altitude it would kill everybody on the ground underneath, which is part of why it was a really stupid idea. But that was what they wanted.

PAUL JAY: And you did it.

LESTER EARNEST: I did it. I convinced them-

PAUL JAY: And what does this do to your own belief system? You believed, had faith in this whole hierarchy and system.

LESTER EARNEST: Well, I knew that I was on the wrong track. However, it was, I was being paid well, as were all of the contractors. This was a giant scam. It was very successful. And incidentally, while studying the situation for getting the nuclear warheads approved, we discovered an awkward business. The landlines going from the central computer to the missile launch sites were duplexed. That is, there was a mainline and a backup line in case this one went bad. And at the end, there was a little black box that listened and could tell when a line went bad, and then it would switch to the backup.

But what they didn’t think about was what if the backup goes bad, too? And so in our analysis of the safety of all of this, we discovered that if the backup line is also malfunctioning, it would produce random noise, and then the computer would be listening. And so we calculated on average, how long would it be before it would see a fire command? And the answer was about two and a half minutes.
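
The kind of estimate described here can be sketched as a waiting-time calculation. This is only an illustration, not the analysis from the classified report: it assumes the noise arrives as independent, uniformly random frames and that exactly one frame pattern counts as a fire command. The frame length and frame rate below are hypothetical values picked to land near the quoted figure; the interview does not give the real line parameters.

```python
def mean_seconds_to_false_command(frame_bits: int, frames_per_second: float) -> float:
    """Expected wait until random noise matches one specific frame pattern.

    Assumes each received frame is independent, uniformly random bits, so a
    match occurs with probability p = 2**-frame_bits per frame and the number
    of frames until the first match is geometric with mean 1/p.
    """
    p_match = 2.0 ** -frame_bits
    expected_frames = 1.0 / p_match
    return expected_frames / frames_per_second


# Hypothetical numbers: a 13-bit command word arriving at 55 frames per second.
wait = mean_seconds_to_false_command(frame_bits=13, frames_per_second=55.0)
print(f"{wait / 60:.1f} minutes")  # prints "2.5 minutes"
```

Under the same assumptions, requiring several specific frames in a row multiplies the per-frame probabilities together, which is why a complete set of launch parameters arriving by chance is far less likely than a single command.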

PAUL JAY: The computer that’s going to tell the missile to get ready to fire-

LESTER EARNEST: Right.

PAUL JAY: -interprets noise on a telephone line in two minutes as a command to get ready to fire?

LESTER EARNEST: Correct.

PAUL JAY: And the thing stands up in the air and gets ready for a …

LESTER EARNEST: That’s right. The missile would erect. But we also were able to prove that it had to get a full set of commands before it would launch; altitude, direction, speed, all that stuff. And we were able to prove that the probability of getting all of that from the noise was negligible. So the effect of all this would be the missile would erect and abort.

So I published a classified report about that titled Inadvertent Erection of the IM-99A.

PAUL JAY: Sounds like a Viagra commercial. [inaudible]

LESTER EARNEST: Right. And as luck would have it, it happened in New Jersey two weeks after I published that. So then I was put in charge of fixing it, which was pretty easy, to prevent the inadvertent erection.

PAUL JAY: But it shows the lack of working these things through. And there’s already missiles there. I mean, the irrationality of the whole thing.

LESTER EARNEST: Yeah. Well, it was even deeper than that. When we first got a hold of the design of the missile control system, we observe—one of the guys in my group observed that … You, first of all, had to do testing of the electronics periodically to make sure it’s working. So you could put a given site into test mode, and then spray a bunch of commands at the missile guidance system, and you could verify that they were received correctly. And when you finished, you could throw the switch back from test to operate.

However, if you gave the missiles a bunch of commands, and then without clearing them you switched from test to operate, they would all erect and fire. That was in the design. So we pointed that out, and they fixed it in a hurry.
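
The flaw described here, commands latched in test mode surviving the switch to operate, can be illustrated with a toy state model. Everything in this sketch is hypothetical: the class, the mode names, and the command strings are invented for illustration, and clearing on a mode switch is only one plausible fix, since the interview does not say how the real design was corrected.

```python
class LaunchSite:
    """Toy model of a site with TEST and OPERATE modes (all names hypothetical)."""

    def __init__(self, clear_on_switch: bool):
        self.mode = "OPERATE"
        self.pending = []   # commands latched but not yet acted on
        self.fired = []     # commands actually executed
        self.clear_on_switch = clear_on_switch

    def set_mode(self, mode: str) -> None:
        if self.clear_on_switch:
            # Assumed fix: drop anything latched under the previous mode.
            self.pending.clear()
        self.mode = mode
        if mode == "OPERATE":
            # In OPERATE, whatever is still latched gets executed.
            self.fired.extend(self.pending)
            self.pending.clear()

    def receive(self, command: str) -> None:
        if self.mode == "TEST":
            self.pending.append(command)  # latched for verification only
        else:
            self.fired.append(command)


def run_test_cycle(site: LaunchSite) -> list:
    """Send test commands, then switch back to OPERATE without clearing them."""
    site.set_mode("TEST")
    site.receive("erect")
    site.receive("fire")
    site.set_mode("OPERATE")
    return site.fired


print(run_test_cycle(LaunchSite(clear_on_switch=False)))  # ['erect', 'fire']
print(run_test_cycle(LaunchSite(clear_on_switch=True)))   # []
```

The first run reproduces the hazard: stale test commands execute the moment the switch is thrown. The second shows that making the mode change itself discard latched state removes the dependence on an operator remembering to clear them.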

The MIT administration figured out that this thing was a fraud, and backed out in 1958 by pushing all of us who were working on it out the door and into a new nonprofit corporation called MITRE.

PAUL JAY: Which continued this work, and goes on for 25 years.

LESTER EARNEST: Which continued to work for 25 years. And other frauds, as well.

PAUL JAY: All right. In the next segment of our interview with Lester Earnest, we’re going to talk about how this enormous fraud actually helped create some of the knowledge that leads to the creation of the internet. Please join us for the continuation of Reality Asserts Itself on The Real News Network.

END
